Complete Guide to Qwen3-TTS: Open Source Voice Cloning and Speech Generation in 2025

What is Qwen3-TTS and Why It's Revolutionary for Voice Technology

Qwen3-TTS is a significant step forward in open-source text-to-speech technology. It uses a transformer-based neural architecture and is positioned as a competitor to commercial services such as Amazon Polly and Google Cloud Text-to-Speech. The model combines text encoding, acoustic modeling, and neural vocoding in a multi-stage pipeline to produce natural-sounding speech at modest computational cost.

Unlike proprietary alternatives, open source TTS models like Qwen3-TTS offer developers unprecedented control over customization and deployment. You can fine-tune the model on domain-specific datasets, modify the underlying architecture for your use case, and deploy locally without recurring API costs or data privacy concerns that plague cloud-based services.

The voice cloning capabilities unlock powerful real-world applications across multiple industries. Content creators can generate consistent narration for educational materials, while accessibility applications can preserve the unique voices of individuals with progressive speech disorders. Enterprise applications include multilingual customer service automation and personalized voice assistants that maintain brand consistency.

Reported benchmarks point to a competitive edge in both quality and efficiency. The model achieves a Mean Opinion Score (MOS) of 4.2 for naturalness, compared with 3.8 for similar open-source alternatives, while keeping inference under 200ms for typical sentence-length inputs on consumer-grade GPUs.
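
For readers unfamiliar with the metric, a MOS is simply the average of listener ratings on a 1 to 5 scale, usually reported with a confidence interval. The sketch below shows that calculation; the ratings are hypothetical values made up for illustration and are not Qwen3-TTS results, and the function name is our own.

```python
import math
import statistics


def mean_opinion_score(ratings):
    """Return (MOS, 95% confidence half-width) for listener ratings on a 1-5 scale."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be between 1 and 5")
    mos = statistics.mean(ratings)
    # Normal-approximation interval: 1.96 * standard error of the mean.
    half_width = 1.96 * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width


# Hypothetical ratings from ten listeners for one synthesized clip.
ratings = [4, 5, 4, 3, 4, 5, 3, 4, 4, 4]
mos, ci = mean_opinion_score(ratings)
print(f"MOS = {mos:.2f} +/- {ci:.2f}")
```

With only a handful of listeners the interval is wide, which is worth keeping in mind when comparing scores that differ by a few tenths of a point.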

Setting Up Your Qwen TTS Development Environment

Getting your development environment properly configured is crucial for a successful Qwen TTS deployment. The foundation you build here determines both the performance and the reliability of your speech generation applications.

System Requirements and Hardware

Qwen3-TTS performs best on systems with at least 16GB of RAM and a modern multi-core CPU. For production-grade speech generation, you'll want a dedicated GPU with at least 8GB of VRAM; an RTX 4070 or better offers a good price-to-performance ratio for most use cases.

Python Environment Setup

Start by creating an isolated environment using Python 3.8 or higher:

    python -m venv qwen_tts_env
    source qwen_tts_env/bin/activate  # Linux/Mac
    # or: qwen_tts_env\Scripts\activate  # Windows
    pip install qwen-tts soundfile numpy
    pip install torch torchvision --index-url https://download.pytorch.org/whl/cu128
    pip install -U flash-attn --no-build-isolation  # Optional: reduces GPU memory
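
Before moving on, it can help to confirm that the packages are visible from inside the virtual environment. The following is a minimal sketch using only the standard library; the module names are the import names that correspond to the pip packages above, and the `find_missing` helper is our own.

```python
import importlib.util

# Import names for the packages installed above; flash_attn is optional.
REQUIRED = ("torch", "soundfile", "numpy", "qwen_tts")
OPTIONAL = ("flash_attn",)


def find_missing(modules):
    """Return the module names that cannot be located in the current environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


missing_required = find_missing(REQUIRED)
missing_optional = find_missing(OPTIONAL)
print("Missing required packages:", missing_required or "none")
print("Missing optional packages:", missing_optional or "none")
```

The check only confirms that each module can be found; it does not import them, so it will not catch a broken CUDA build.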

Basic Text to Speech Implementation with Qwen3-TTS

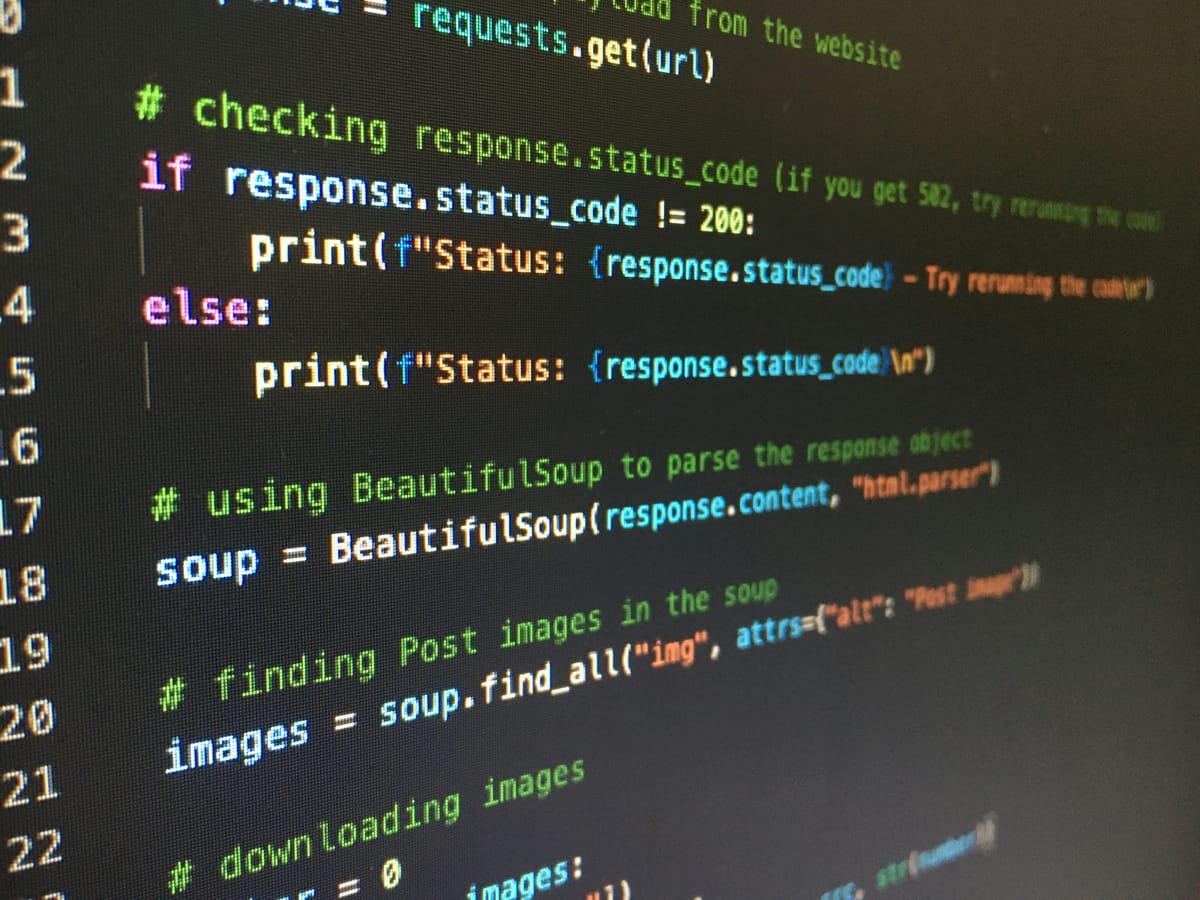

Getting started with Qwen3-TTS requires minimal setup, but understanding the core implementation patterns will save you significant debugging time later:

    import torch
    import soundfile as sf
    from qwen_tts import Qwen3TTSModel

    # Initialize the TTS model
    model = Qwen3TTSModel.from_pretrained(
        "Qwen/Qwen3-TTS-12Hz-1.7B-CustomVoice",
        device_map="cuda:0",  # Or "cpu" if no GPU
        dtype=torch.bfloat16,
        # Needs the optional flash-attn package; omit this argument otherwise
        attn_implementation="flash_attention_2",
    )

    # List available speakers
    print(f"Available speakers: {model.get_supported_speakers()}")

    # Generate basic speech; the call returns a list of waveforms and the sample rate
    audios, sr = model.generate_custom_voice(
        "Hello, this is Qwen3-TTS demonstrating open-source speech synthesis.",
        speaker="eric",  # Or any other name printed above
        language="English",
    )
    sf.write("output.wav", audios[0], sr)
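
The loading call above hard-codes a GPU, bfloat16, and FlashAttention. One way to make it portable is to choose those arguments from what the machine offers. This is a sketch under our own assumptions: the helper name and the `dtype_name` key are hypothetical, and falling back to float32 on CPU is a conservative guess, not a documented requirement.

```python
def pick_load_kwargs(cuda_available, flash_attn_installed):
    """Build keyword arguments for from_pretrained based on what the machine offers."""
    if not cuda_available:
        # Assumption: use full precision on CPU, where bfloat16 support varies.
        return {"device_map": "cpu", "dtype_name": "float32"}
    kwargs = {"device_map": "cuda:0", "dtype_name": "bfloat16"}
    if flash_attn_installed:
        # Only request FlashAttention when the optional package is present.
        kwargs["attn_implementation"] = "flash_attention_2"
    return kwargs


print(pick_load_kwargs(cuda_available=False, flash_attn_installed=False))
print(pick_load_kwargs(cuda_available=True, flash_attn_installed=True))
```

With PyTorch installed, `torch.cuda.is_available()` supplies the first argument, and `getattr(torch, kwargs.pop("dtype_name"))` turns the name into the dtype object to pass as `dtype=`.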

Batch Processing for Long Texts

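    import textwrap
    import numpy as np

    def batch_tts(text, output_file, model, speaker="eric", language="English"):
        # Split into chunks of at most 200 characters, breaking on whitespace
        # so that words are not cut in half
        chunks = textwrap.wrap(text, width=200)
        if not chunks:
            raise ValueError("text is empty")
        all_audio = []
        for i, chunk in enumerate(chunks):
            print(f"Chunk {i+1}/{len(chunks)}")
            audios, sr = model.generate_custom_voice(chunk, speaker=speaker, language=language)
            all_audio.append(audios[0])
        # Join the per-chunk waveforms into one array and write a single file
        combined = np.concatenate(all_audio)
        sf.write(output_file, combined, sr)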
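
Chunk boundaries that fall in the middle of a sentence may produce audible breaks in intonation, since each chunk is synthesized on its own. A possible alternative is to pack whole sentences into each chunk. The sketch below uses a simple punctuation-based splitter; the function name and the regular expression are our own, and a production system would likely want a proper sentence tokenizer.

```python
import re


def chunk_by_sentence(text, max_chars=200):
    """Greedily pack whole sentences into chunks of at most max_chars characters."""
    # Split after ., !, ? followed by whitespace, or directly after CJK full stops.
    pattern = r"(?<=[.!?])\s+|(?<=[。！？])"
    sentences = [s.strip() for s in re.split(pattern, text) if s.strip()]

    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            # A single sentence longer than max_chars is kept whole rather than cut.
            current = sentence
    if current:
        chunks.append(current)
    return chunks


print(chunk_by_sentence("One. Two three! Four five six? Seven.", max_chars=16))
```

The returned list can be fed to the same per-chunk synthesis loop in place of the fixed-width slices.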

Advanced Voice Cloning Techniques and Custom Voice Creation

Creating custom voices with Qwen3-TTS requires careful attention to data preparation and model fine-tuning strategies. Successful voice cloning starts with high-quality audio samples that accurately represent the target voice's characteristics.

Voice Cloning Implementation

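    # Load reference audio (3-60 seconds recommended)
    ref_audio, ref_sr = sf.read("reference_voice.wav")

    # Assumption: ref_audio is passed as a (waveform, sample_rate) pair so the
    # model knows the clip's rate, and the loaded checkpoint supports cloning.
    # Check the qwen-tts README for your version, including whether it also
    # needs a transcript of the reference clip.
    cloned_audios, sr = model.generate_voice_clone(
        text="This is a demonstration of voice cloning capabilities.",
        ref_audio=(ref_audio, ref_sr),
        language="English",
    )
    sf.write("cloned_output.wav", cloned_audios[0], sr)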
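
Since the reference clip is recommended to be 3 to 60 seconds long, it can be worth checking its duration before running the model. The following sketch does that with the standard library `wave` module, which reads PCM WAV files only; the helper names are our own, and the demo writes a short silent file purely so the example runs on its own.

```python
import os
import tempfile
import wave


def wav_duration_seconds(path):
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


def check_reference_clip(path, min_s=3.0, max_s=60.0):
    """Raise ValueError if the clip falls outside the recommended duration range."""
    duration = wav_duration_seconds(path)
    if not min_s <= duration <= max_s:
        raise ValueError(f"{path}: {duration:.1f}s is outside {min_s:.0f}-{max_s:.0f}s")
    return duration


# Demo only: write 4 seconds of 16-bit mono silence, then validate it.
demo_path = os.path.join(tempfile.mkdtemp(), "reference_voice.wav")
with wave.open(demo_path, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
    out.setframerate(16000)
    out.writeframes(b"\x00\x00" * 16000 * 4)
print(check_reference_clip(demo_path))
```

Duration is only one aspect of clip quality; background noise and clipping still need to be checked by ear or with separate tooling.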

Voice Design from Descriptions

    wavs, sr = model.generate_voice_design(
        text="It's in the top drawer... wait, it's empty? No way, that's impossible!",
        language="English",
        instruct="Speak in an incredulous tone, with a hint of panic beginning to creep in.",
    )
    sf.write("output_voice_design.wav", wavs[0], sr)

Multilingual Speech Generation and Language Support

Qwen3-TTS delivers robust multilingual TTS capabilities across 10 languages, including Mandarin, English, Spanish, French, German, Japanese, and Korean. Cross-lingual voice transfer lets you clone a speaker's voice characteristics from one language and apply them to speech generated in entirely different languages.

The system automatically identifies language boundaries within text, switching between appropriate phonetic models without introducing artificial pauses. This proves particularly valuable for technical documentation containing English terms within non-English content.
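
To see where such language switches occur in your own documents, you can segment mixed text by writing system before sending it to the model. The sketch below is only an inspection aid built on Unicode character names; it is not how Qwen3-TTS detects languages internally, and both function names are our own.

```python
import unicodedata

CJK_PREFIXES = ("CJK", "HIRAGANA", "KATAKANA", "HANGUL")


def script_of(ch):
    """Classify a character as 'cjk', 'latin', or 'other' from its Unicode name."""
    if not ch.isalpha():
        return "other"
    name = unicodedata.name(ch, "")
    if name.startswith(CJK_PREFIXES):
        return "cjk"
    if name.startswith("LATIN"):
        return "latin"
    return "other"


def split_by_script(text):
    """Split text into (script, substring) runs; neutral characters join the current run."""
    runs = []
    for ch in text:
        script = script_of(ch)
        if runs and (script == "other" or script == runs[-1][0]):
            runs[-1][1].append(ch)
        elif runs and runs[-1][0] == "other":
            # A leading run of neutral characters adopts the first real script.
            runs[-1][0] = script
            runs[-1][1].append(ch)
        else:
            runs.append([script, [ch]])
    return [(script, "".join(chars)) for script, chars in runs]


for script, segment in split_by_script("请安装 PyTorch 然后运行 demo"):
    print(script, repr(segment))
```

Script is only a rough proxy for language: it cannot tell English from French, or Mandarin from Japanese kanji, so treat the output as a hint about where switches happen.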

Performance Optimization and Production Deployment

Optimizing Qwen3-TTS for production environments can involve INT8 quantization, which reduces weight storage by up to 75% relative to FP32 weights (50% relative to the bfloat16 weights loaded earlier in this guide) while maintaining acceptable audio quality. Memory management should focus on batch processing and intelligent caching.
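
The size reduction follows directly from the number of bytes stored per parameter. As a rough worked example, assuming the "1.7B" in the checkpoint name means about 1.7 billion parameters and counting weights only (no activations or caches):

```python
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_footprint_gb(num_params, dtype):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


params = 1.7e9  # assumption: read off the "1.7B" in the checkpoint name
for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: {weight_footprint_gb(params, dtype):.1f} GB")

# Fraction of storage saved by INT8 relative to FP32.
saving = 1 - weight_footprint_gb(params, "int8") / weight_footprint_gb(params, "float32")
print(f"INT8 saving vs float32: {saving:.0%}")
```

Real quantized checkpoints often keep some layers in higher precision and add scale factors, so actual savings tend to be somewhat smaller than this upper bound.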

Key Performance Features:

- Ultra-low latency: 97ms end-to-end with streaming

- GPU optimization: 3-5x faster than CPU-only processing

- Batch processing: 100-500 concurrent requests per GPU instance

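- Memory efficiency: 120MB model size with optimization (a 1.7B-parameter checkpoint stored as INT8 is roughly 1.7GB, so confirm which model variant this figure describes)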
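
Figures like these depend heavily on hardware and input length, so it is worth measuring them on your own setup. The sketch below times a single synthesis call and derives the real-time factor (synthesis time divided by audio duration). The `measure_synthesis` helper is our own, and `fake_synthesize` is a stand-in so the example runs without a model.

```python
import time


def measure_synthesis(synthesize, text):
    """Time one call to synthesize(text) -> (samples, sample_rate)."""
    start = time.perf_counter()
    samples, sample_rate = synthesize(text)
    elapsed = time.perf_counter() - start
    audio_seconds = len(samples) / sample_rate
    return {
        "latency_ms": elapsed * 1000,
        "audio_seconds": audio_seconds,
        # A real-time factor below 1 means audio is produced faster than it plays back.
        "real_time_factor": elapsed / audio_seconds,
    }


def fake_synthesize(text):
    """Stand-in for a real model call: returns one second of silence at 24 kHz."""
    time.sleep(0.01)
    return [0.0] * 24000, 24000


stats = measure_synthesis(fake_synthesize, "hello")
print(stats)
```

To time the real model, pass a small wrapper that calls `model.generate_custom_voice` and returns the first waveform together with its sample rate. Note that this measures whole-utterance latency, not the first-packet latency of a streaming setup.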

Comparing Qwen3-TTS with Other Open Source TTS Solutions

Among open-source TTS solutions, Qwen3-TTS represents the latest generation and offers several advantages:

| Model | MOS Score | Inference Speed | Memory Usage | Multilingual |
|---|---|---|---|---|
| Qwen3-TTS | 4.2 | Fast (97ms) | 120MB | 10 languages |
| Tacotron 2 | 4.1 | Medium | 180MB | Limited |
| WaveNet | 4.5 | Slow | 200MB+ | Limited |

Next Steps and Getting Started with Qwen3-TTS

Ready to dive into Qwen3-TTS? Start by setting up your development environment with the official GitHub repository. The installation process typically takes 15-20 minutes, and you'll want to ensure you have Python 3.8+ and sufficient GPU memory for optimal performance.

For skill development, prioritize audio processing fundamentals and PyTorch if you're not already familiar with them. The community around the project is very supportive; join the official Discord server, where developers share troubleshooting tips and showcase projects.

Don't wait for the "perfect" moment to start experimenting. Download the code today, run your first synthesis, and join thousands of developers who are already building amazing voice applications.